Smartphones have long been ubiquitous, but mobile technology is still advancing faster than many other sectors of consumer technology. That is especially true for the camera modules, where manufacturers surprise us with new features, designs, and spec improvements with every new-generation device.

At DXOMARK we are keeping a close eye on these advancements so we can adjust and modify our testing protocols and methodologies accordingly and make sure that DXOMARK remains the most relevant smartphone camera testing out there. We launched smartphone camera tests in 2012 and released the first update to our protocol in 2017, adding dedicated tests for simulated bokeh modes and zoom quality, as well as for low-light testing down to 1 lux and motion-based test scenes. In September 2019, we introduced the Wide and Night scores to take into account the increased availability of ultra-wide cameras on smartphones as well as improved low-light and night shooting capabilities.

One year later and it’s time for another update to the DXOMARK smartphone main camera protocol. In version 4 we are adding tests for image quality in the camera preview and what we call trustability. Trustability is designed to make our Camera test protocol more exhaustive and challenging. It brings more use cases and an updated scoring system for increased user relevance. We have also improved the testing methodology for autofocus, with new objective lab tests in low light, for handheld shooting, and under HDR conditions.

Trustability for Photo

Even the most modern and high-end smartphone cameras can deliver excellent results in one test scene, only to fail in a similar but different scene. In other words, just because a camera does well in some situations does not mean you can trust it to do well all the time. At the same time, as smartphone camera quality has improved over the years, users are now using their phones in any kind of photographic situation, even under difficult conditions that would previously have required a DSLR or mirrorless camera, such as shooting low-light scenes or fast-moving subjects. It’s therefore absolutely critical for smartphone cameras to provide good image quality on a consistent basis across all scenes.

This is why we have introduced trustability. Trustability measures the camera’s ability to deliver consistent still image and video quality across all shooting scenarios, not just a finite number of selected test scenes. It makes the DXOMARK Camera protocol both more exhaustive and more challenging.

More still image sample scenes

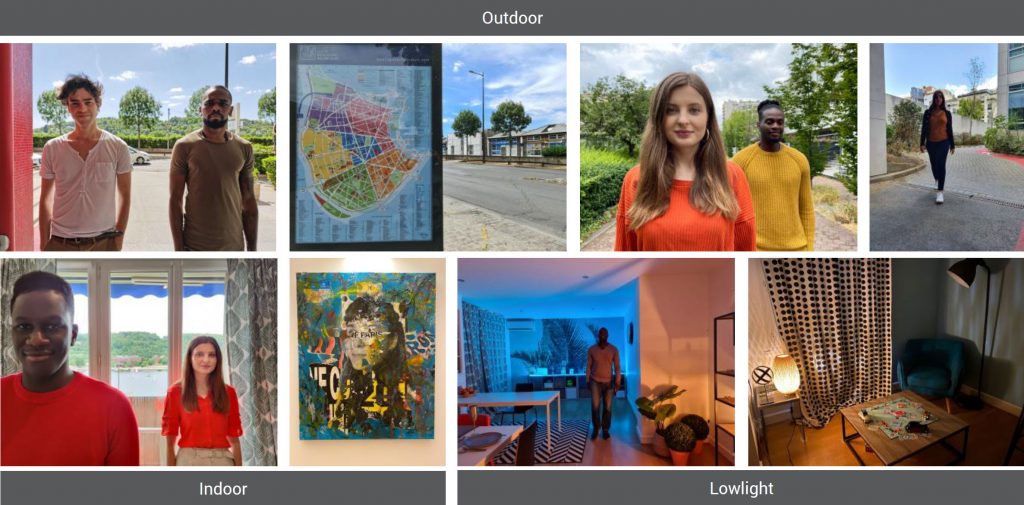

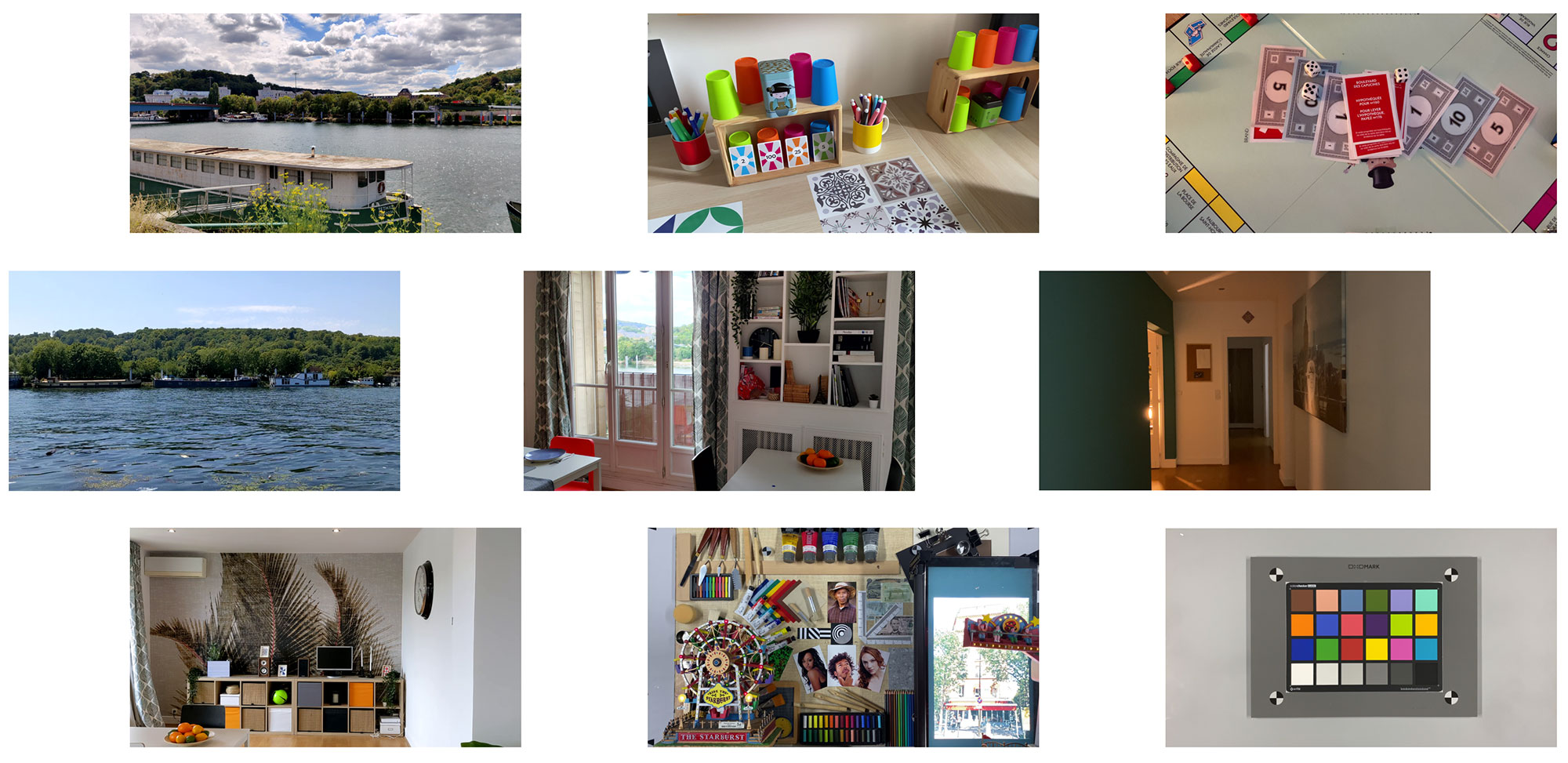

To implement trustability, we had to expand our current set of sample scenes and images. We have introduced a wider range of new photo use cases that cover portrait, multi-plane, close-up, and moving scenes across all light conditions. There are also entirely new sample sets for portrait and low-light shooting. Below you can see a selection of our new test scenes.

Trustability looks at all aspects of image quality, such as exposure, color, texture, noise, and focus. Let’s have a look at some examples of our new scenes:

People scenes like the one below are designed to let us check if a camera is capable of delivering accurate face exposure, natural rendering of various skin tones, and good rendering of fine detail on facial features. All these attributes are important in any portrait shot, including family snapshots, pictures of children, and typical vacation photos.

In this comparison we can see that portrait rendering can vary a lot among cameras. This is especially true for the tradeoff between texture preservation and noise reduction, but also in terms of exposure. For example, the iPhone captures much brighter skin tones here than the Xiaomi. However, the latter has strong highlight clipping in the sky in the background.

We’ve also added scenes with moving subjects. This helps us evaluate the preservation of sharp details on moving subjects. This is still a challenge for any camera: exposure times have to be as short as possible to avoid motion blur, but short exposures can in turn result in a poor texture/noise tradeoff, especially in indoor conditions and low light. In addition, moving subjects are a challenge for the autofocus system, which has to track the subject. These attributes are relevant for a range of photographic use cases, including family, street, sports, and animal photography.

We are using more HDR portrait and landscape scenes to check how good cameras are at preserving both highlight and shadow detail in difficult high-contrast lighting conditions. HDR capabilities are important in pretty much any kind of photography, including vacation shots, architecture, landscape, portraiture, and interiors. As you can see in the comparison below, results can vary quite drastically.

We use complex multiplane scenes, such as group portraits or still life closeups, to evaluate the reliability of autofocus systems. In addition to autofocus accuracy, we check consistency over consecutive shots, and the depth of field. In the images below, both the Apple and Huawei devices focus on the foreground subject, but the Sony focuses on the person in the background. The Xperia 1 offers pretty wide depth of field, though, so the front subject is still rendered pretty sharp.

We have also expanded our selection of low-light scenes, which are designed to test the camera’s ability to deliver good exposure while at the same time preserving a decent texture/noise tradeoff. Use cases include photographs taken during dinner, night photography, interior shots, and low-light still lifes.

Randomized shooting plan

In addition, we have now added a random element to what we call the shooting plan (which is all the sample images we shoot for a review). These random scenes reward those devices that can produce consistently good results in any condition, and they help diversify testing even more.

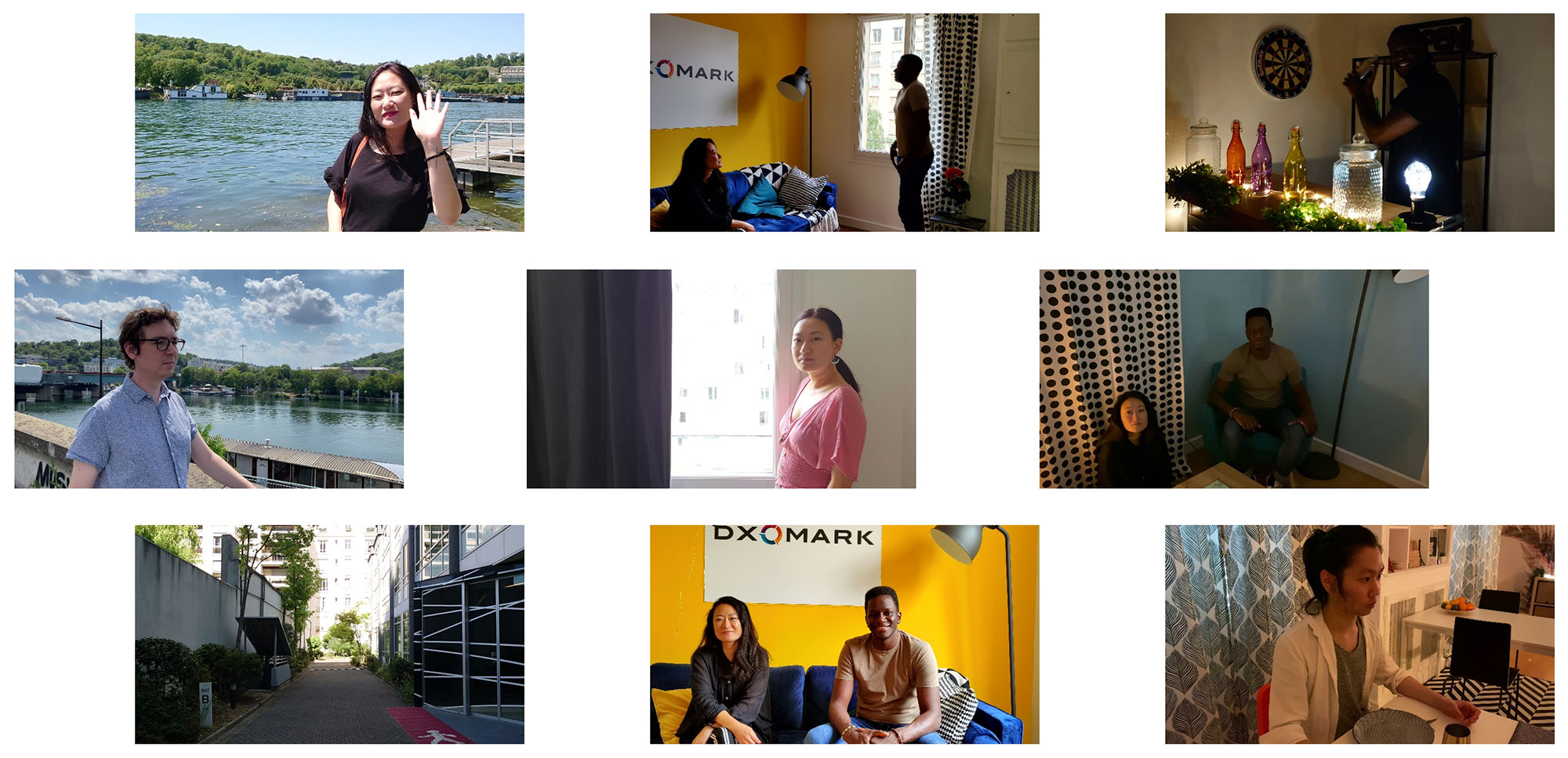

In addition to all our precisely-defined sample scenes that we capture for every test, we now also shoot a series of samples that are defined only in terms of composition, scene content, and lighting conditions, but not in terms of location, subject, or framing. For example, such a scene could be a backlit portrait or a low-light cityscape. Our testers then capture an image at any location and with any subject that fulfills the defined requirements.

Let’s have a look at a couple of examples:

The “people on sofa” random scene has to meet the following conditions: two motionless subjects, low-contrast indoor lighting conditions, and a wide range of colors. Within those parameters, the DXOMARK testers are free to capture whatever scene they want. All three images below comply with the requirements and could therefore be used in the test to evaluate color, exposure, and artifacts, among other attributes.

The “flowers” random scene has to be captured under outdoor lighting and has to be a still-life image as well as a close-up with some color elements. As with the indoor portrait above, the testers are free to capture any scene that meets these requirements. Any of the three images below would do the job of helping evaluate color and autofocus.
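To make the idea of a scene that is defined only by its constraints more concrete, here is a minimal Python sketch. It is an illustration only and not DXOMARK's tooling: the `RandomScene` and `Shot` classes, their field names, and the `complies` helper are all hypothetical, and only the scene requirements themselves are taken from the two examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RandomScene:
    """Hypothetical spec: constraints only, no location, subject, or framing."""
    name: str
    lighting: str      # "indoor" or "outdoor"
    subjects: int      # number of people required (0 for a still life)
    motion: bool       # whether the subjects move
    close_up: bool     # whether the shot must be a close-up
    evaluates: tuple   # attributes the scene helps evaluate


@dataclass(frozen=True)
class Shot:
    """Hypothetical tags a tester could attach to a captured image."""
    lighting: str
    subjects: int
    motion: bool
    close_up: bool


def complies(shot: Shot, scene: RandomScene) -> bool:
    """Return True if the shot meets every constraint of the scene."""
    return (
        shot.lighting == scene.lighting
        and shot.subjects == scene.subjects
        and shot.motion == scene.motion
        and shot.close_up == scene.close_up
    )


# The two example scenes from the text, expressed as constraints.
PEOPLE_ON_SOFA = RandomScene(
    "people on sofa", "indoor", 2, False, False,
    ("color", "exposure", "artifacts"),
)
FLOWERS = RandomScene(
    "flowers", "outdoor", 0, False, True,
    ("color", "autofocus"),
)

# Any living room with two seated people would qualify.
living_room = Shot(lighting="indoor", subjects=2, motion=False, close_up=False)
print(complies(living_room, PEOPLE_ON_SOFA))
```

The sketch omits the softer requirements (low-contrast lighting, a wide range of colors), which in practice rely on the tester's judgment rather than on simple tags.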

To introduce trustability into the new protocol and scoring system, we updated all tests that are undertaken in the camera’s default photo auto mode (exposure, color, texture, noise, artifacts, and autofocus). We also introduced new perceptual measurements and modified the computation of the overall score to take the new elements into account.

More low-light and HDR scenes, more challenging motion for Video

Trustability has of course also brought improvements to our video testing, offering a much wider range of tests overall. While our objective lab testing has remained largely unchanged, we have added more use cases, low-light and HDR scenes, and portrait and closeup videos, as well as more challenging motion (of both photographer and subjects) to our perceptual video testing. This makes our video test protocol both more complete and more challenging. As with stills, smartphone cameras have to deliver consistently good image quality across a wide range of conditions and scenes in video mode in order to achieve high scores.

To integrate trustability into our video testing, we have also modified the video scoring system for all attributes (exposure, color, texture, noise, artifacts, autofocus, and stabilization) as well as the computation of the overall score.

Other new videos include landscape shots, interiors, and closeups for perceptual analysis.

These new scenes make the new version of the video protocol more challenging in pretty much all test categories, including exposure, color and skin tones, autofocus and video stabilization.

For example, this backlit portrait scene tests the HDR capabilities of a camera as well as its exposure strategy. As you can see, results differ vastly between devices. The Sony Xperia 1 prioritizes detail in the bright background, strongly underexposing the darker foreground. As a result, it renders the subject of the portrait pretty much invisible. The Samsung Galaxy Note 10+ 5G goes the other way and exposes for the subject. This is a better strategy than the Sony’s, but results in a strongly overexposed background. The Google Pixel 4 strikes the best balance in this instance, but still isn’t quite as good as we would like, with strong underexposure on the subject.

HDR capabilities are also tested with this landscape scene. In these samples, the sky is rendered quite differently by the three comparison devices. The Pixel 4 captures the brightest sky, with fairly large areas of highlight clipping, but also shows some clipping in the shadows. The Huawei P40 Pro protects the highlights better, delivering a fairly natural result. The Apple pulls the highlights back even further, thus avoiding any clipping, but the sky ends up looking a touch unnatural.

We also have new video scenes that are designed to test the temporal aspect of exposure. In this moving portrait below, the overall exposure is good on both subject and background, but it changes slightly between frames, making for an unnatural effect. This is also one of several new outdoor and indoor scenes that we can use to check for white balance casts and instabilities.

Other new test scenes help us evaluate the autofocus performance more comprehensively in terms of tracking, convergence speed, and smoothness, as well as for closeup capabilities.

Finally, we have also made our video stabilization testing more challenging by including a scene that records a video while running. Up to now we had been testing stabilization only while walking. The higher speed and stronger vibrations during running take even the best stabilization systems to their limits.

Preview

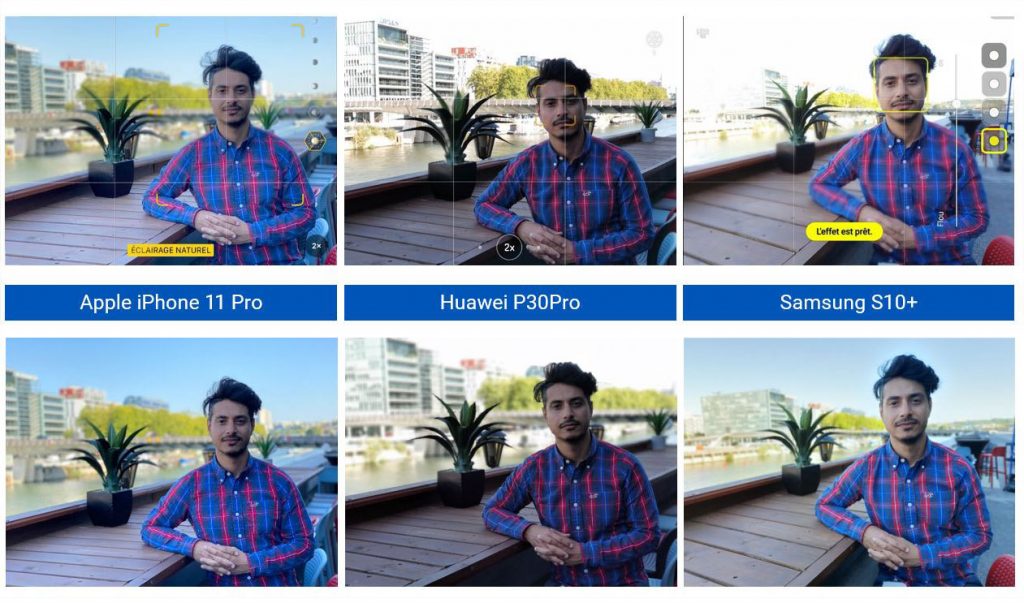

Up to now our smartphone camera reviews have focused on image recording. However, the performance of a camera app’s preview mode gives you the very first impression of image quality and can have a big impact on image results as well. For example, it’s impossible to get the framing of a shot right if preview framing does not align with the framing of the captured image. Similarly, a preview image that is too bright or dark might make you unnecessarily apply exposure compensation. In the worst-case scenario, a photographer might simply choose not to capture an image at all if the preview looks like it’s not worth pressing the shutter button, even though the recorded image would be absolutely fine.

Among the current generation of devices, preview performance varies quite significantly. In the sample scene below, the Samsung Galaxy S10+ shows the biggest difference between the preview and the final image. Looking at the preview image, you might not even bother pressing the shutter button because of the extensive highlight clipping in the background. However, the camera applies effective HDR processing to the final image, which, in total contrast to the preview, shows decent color and detail in the background.

Much of the background in the Huawei P30 Pro preview image is clipped as well, but the P30 Pro does not apply any HDR processing to the output image. While the output isn’t great, at least the preview is fairly accurate. The iPhone 11 Pro arguably does the best job in this comparison. It uses HDR processing on the actual output image and gives us a fairly accurate preview of the end result on its display.

This is why DXOMARK has added Preview to its camera test attributes. Preview’s main task is to check if what you see on the preview screen is what you get in the final image. For example, HDR processing and bokeh simulation should be applied to the preview image in exactly the same way as to the final image. In addition, we check preview framing and how smoothly the zoom operates.

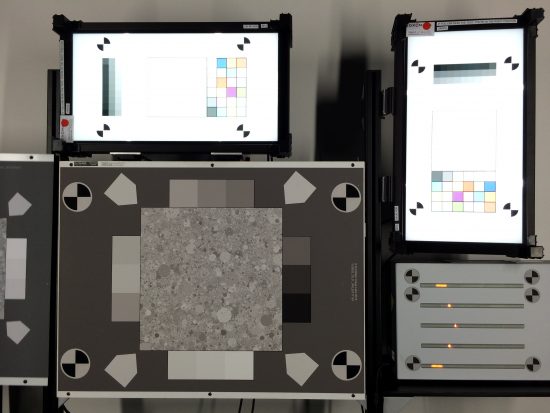

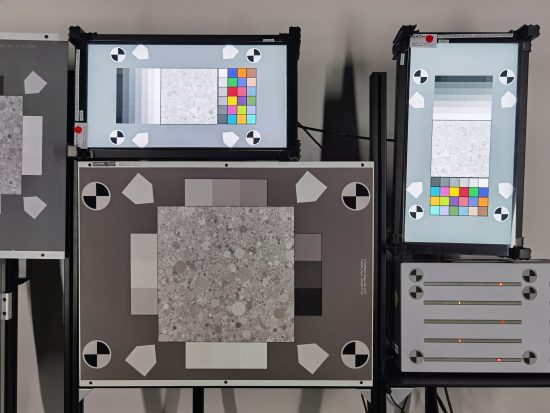

All preview analysis is undertaken perceptually while looking at the preview and output images side by side. For HDR testing, we use a specific setup that includes a mannequin and an Analyzer HDR slide. Our testers look at target exposure on the mannequin’s face and entropy on the slide for EV+4 and EV+7. We undertake HDR testing for preview at three light levels: 1000 lux D65, 100 lux TL84, and 20 lux A.
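The HDR preview conditions described above can be laid out as a small test matrix. The following Python sketch simply enumerates the combinations named in the text; the function name and the dictionary layout are assumptions made for illustration, and the evaluation itself remains perceptual.

```python
# Light levels (lux) and illuminants as listed in the text.
LIGHT_CONDITIONS = [(1000, "D65"), (100, "TL84"), (20, "A")]

# Entropy on the HDR slide is assessed at these two levels.
EV_OFFSETS = [4, 7]


def preview_hdr_checks():
    """Hypothetical helper: list every check per light condition."""
    checks = []
    for lux, illuminant in LIGHT_CONDITIONS:
        base = {"lux": lux, "illuminant": illuminant}
        # One exposure check on the mannequin's face per condition.
        checks.append({**base, "check": "target exposure on mannequin face"})
        # One entropy check on the slide per EV offset.
        for ev in EV_OFFSETS:
            checks.append({**base, "check": f"entropy on slide at EV+{ev}"})
    return checks


for item in preview_hdr_checks():
    print(f'{item["lux"]:>4} lux {item["illuminant"]:<4} {item["check"]}')
```

With three light conditions and three checks each, the matrix holds nine preview comparisons per device.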

For zoom preview tests, we use the zoom buttons in the camera app and the pinch–zoom gesture to go from the widest to the longest zoom setting, and film the resulting preview video on the display of the device. Our testers then analyze the footage, looking out for any jumps, stepping, or other inconsistencies in terms of exposure, color, and field of view.
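Our analysis of the zoom footage is done by eye, but the notion of a "jump" can be illustrated numerically. The Python sketch below assumes that a per-frame value such as field of view has somehow been extracted from the footage; the `find_jumps` function, the threshold, and the sample values are all made up for illustration and are not part of the DXOMARK protocol.

```python
def find_jumps(values, threshold):
    """Return the frame indices where the value changes by more than
    `threshold` compared with the previous frame."""
    return [
        i
        for i in range(1, len(values))
        if abs(values[i] - values[i - 1]) > threshold
    ]


# Made-up field-of-view values (degrees) for consecutive preview frames
# while zooming in. The drop at frame 4 mimics a switch between camera
# modules that is not smoothed out in the preview.
fov = [78.0, 76.5, 75.0, 73.4, 60.0, 58.6, 57.1]

print(find_jumps(fov, threshold=5.0))  # → [4]
```

The same check could be applied to per-frame brightness or color values to illustrate exposure or color inconsistencies during the zoom.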

Other updates

Trustability and Preview are the core elements of version 4 of the DXOMARK Camera test protocol, but there is a range of additional updates, mainly to our objective testing methodologies in a controlled lab environment, but also to some of our perceptual testing methods in real-life scenes.

New objective autofocus measurements

Previously, the lowest light level we used for objective autofocus testing in the lab was 20 lux. We now also test autofocus at the extremely low light level of 5 lux. There are also new objective autofocus tests under high dynamic range conditions and with simulated handheld motion (with the device mounted on a motion platform) to replicate even more use cases and shooting situations in the lab.

New HDR measurements

Previously our objective testing in the lab was undertaken under homogeneous light conditions, with high dynamic range scenes covered by our real-life shooting and perceptual evaluation. Now we have expanded our objective tests to include HDR recording as well.

Expanded zoom testing

Finally, with new devices offering ever-expanding zoom ranges and quality, we have modified our zoom tests accordingly. We previously undertook zoom testing to cover zoom factors of approximately 2x to 8x. We have now expanded zoom tests to deliver results across a wider zoom range from approximately 1.5x to 10x and beyond.

We have also improved our resolution measurements for zoom. Our testing setup is now fully automated and is also more demanding for the autofocus: our experts apply a strong defocus between shots, and the shutter is then triggered automatically one second later, which is a real challenge for any smartphone camera when zooming. In addition, we updated our shooting plan with more portrait and HDR scenes as well as other complex use cases for zoom testing, and we introduced a new AI measurement on our perceptual chart for texture evaluation.
